Behind the chatbot smile: what you don't see matters too

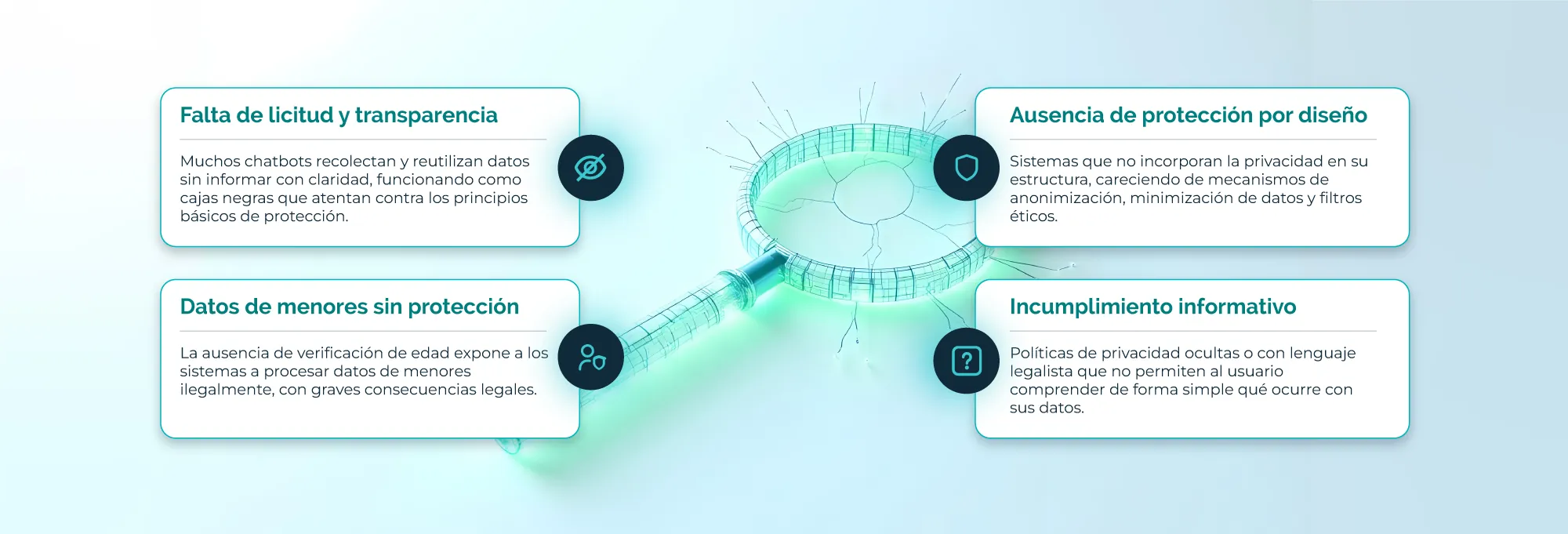

Many conversational assistants still operate as black boxes: they collect information without explaining how it will be used. Some do not even distinguish between adults and minors, allowing interactions without adequate filters. These problems are not technical; they are human. And they must be solved by design.

Many language models continue to learn from users, even in sensitive contexts such as health or education. Although they may seem harmless, they can infer emotions, routines or habits from just a few sentences.

A 2023 study by the Center for Humane Technology warned that misconfigured systems can generate inappropriate responses to vulnerable users. The cause is not artificial malice, but a lack of ethical design.

When AI converses, it also infers. The real differentiator, therefore, is not a faster chatbot, but one that shows more respect for its users.